Last time we talked about Batch inserts, we explored a way to achieve that using Go’s SQL standard library[1]. While this approach works well, it’s not the only option available out there. As good engineers, we like to explore our chances and go with the most efficient and reliable solution.

For that matter, we will be exploring a great alternative to this problem through the use of GORM, an ORM for Go that offers interesting features to your Go applications.

To highlight its ability to handle batch inserts, we will be comparing this one with the previous approach we used. We will be using the same scenario to run our benchmarks[2], creating a batch of contacts to save them in a Postgres database.

To start things off, let’s see an overview of what our benchmark has in store.

Benchmark Overview

Our application’s repo can be found at https://github.com/wawandco/gorm-batch-insert and it is simply a Go application with two major entities, Gorm Manager(gorman) and GoSQL(gosqlman). Each manager has access to a connection to the database using their corresponding package(gorm - database/sql~ which we’ll refer to as go/sql from now on) and a method which allows them to save contacts in batches.

In addition to those, we also have two benchmark tests, one for each approach, both in the same package. As you know, we’re not allowed to have tests called the same in the same package, hence why we have one of them commented. This is because we’ll be using benchstat, a Go tool to compare benchmark results. Since we’ll be doing this in the form of an A/B comparison then we’ll be using the same name and package to hold both these benchmarks.

Now, let’s take a look at the way we’re setting up the connection for each approach and the way we’re saving those records in batches.

The go/sql Way

Starting off, we have our setup function which starts a connection to our notebook(local) database, like so:

func setup() (*sql.DB, error) {

dbUser := os.Getenv("DB_USER")

dbPass := os.Getenv("DB_PASSWORD")

dbHost := os.Getenv("DB_HOST")

dbPort := os.Getenv("DB_PORT")

dbName := os.Getenv("DB_NAME")

dbURL := fmt.Sprintf(

"postgres://%s:%s@%s:%s/%s?sslmode=disable",

dbUser, dbPass, dbHost, dbPort, dbName)

conn, err := sql.Open("postgres", dbURL)

if err != nil {

return nil, fmt.Errorf("failed to start database with sql package: %w", err)

}

return conn, nil

}

Simple and straightforward. Now, this database connection is later then saved as part of a goSqlBatchManager which will be in charge of saving contacts in batches. This operation looks like this:

type goSqlBatchManager struct {

Conn *sql.DB

}

func NewGoSQLBatchManager() (goSqlBatchManager, error) {

conn, err := setup()

if err != nil {

return goSqlBatchManager{}, fmt.Errorf("failed to start a gosql batch manager: %w", err)

}

return goSqlBatchManager{conn}, nil

}

That is the function we will be calling from our benchmark to initialize our go/sql manager and perform our test. Now, the remaining piece of the puzzle is the batch manager method we will be using to save contacts in batches, which is the following one:

func (gsqbm goSqlBatchManager) SaveContactsInBatches(contacts internal.Contacts) error {

contactGroups := contacts.SplitInGroups(internal.BatchSize)

for _, contacsG := range contactGroups {

var (

placeholders []string

vals []interface{}

)

for index, contact := range contacsG {

placeholders = append(placeholders, fmt.Sprintf("($%d,$%d,$%d)",

index*3+1,

index*3+2,

index*3+3,

))

vals = append(vals, contact.FirstName, contact.LastName, contact.Email)

}

txn, err := gsqbm.Conn.Begin()

if err != nil {

return fmt.Errorf("could not start a new transaction: %w", err)

}

insertStatement := fmt.Sprintf("INSERT INTO contacts(first_name,last_name,email) VALUES %s", strings.Join(placeholders, ","))

_, err = txn.Exec(insertStatement, vals...)

if err != nil {

txn.Rollback()

return fmt.Errorf("failed to insert multiple records at once: %w", err)

}

if err := txn.Commit(); err != nil {

return fmt.Errorf("failed to commit transaction: %w", err)

}

}

return nil

}

Now, you may have noticed we made a slight modification to this one(compared to our previous post), this is because we want to have the ability to control the BATCH_SIZE to respect the limit of 65.535 parameters of postgres for prepared statements, and also to use the same value for a fair comparison.

This method, takes the given contacts and groups them in groups of contacts(a slice of slices) to then, insert each group accordingly in batches. For those of you who are curious, this is how SplitInGroups looks like:

type Contact struct {

FirstName string

LastName string

Email string

}

type Contacts []Contact

func (c Contacts) SplitInGroups(groupSize int) []Contacts {

if len(c) <= groupSize {

return []Contacts{c}

}

var contactGroups []Contacts

for i := 0; i < len(c); i += groupSize {

end := i + groupSize

if end > len(c) {

end = len(c)

}

contactGroups = append(contactGroups, c[i:end])

}

return contactGroups

}

Now, this approach can be considered verbose and hard to follow, especially when looking at the bit where we formulate the placeholder for our insert statement.

Let’s take a look at the same elements but on the other side of the coin.

The GORM Way

GORM stands as a popular Object-Relational Mapping [3] (ORM) package for the Go programming language, offering a comprehensive range of features, including, associations, hooks, preloading, and transactions. It streamlines the process of defining relationships between different models, simplifying the linking of related data across multiple tables[4].

Additionally, GORM provides robust migration support, enabling developers to define and implement changes to the database schema using Go, ensuring seamless transitions and updates in database structures.

That being said, let’s see how our setup function looks for GORM:

func setup() (*gorm.DB, error) {

dbUser := os.Getenv("DB_USER")

dbPass := os.Getenv("DB_PASSWORD")

dbHost := os.Getenv("DB_HOST")

dbPort := os.Getenv("DB_PORT")

dbName := os.Getenv("DB_NAME")

Conn, err := gorm.Open(postgres.New(postgres.Config{

DSN: fmt.Sprintf("host=%s user=%s password=%s dbname=%s port=%s sslmode=disable", dbHost, dbUser, dbPass, dbName, dbPort),

}), &gorm.Config{

CreateBatchSize: internal.BatchSize,

})

if err != nil {

return nil, fmt.Errorf("Could not open a connection to the database: %s", err.Error())

}

return Conn, nil

}

Data Source Name(DSN), is essentially a string of information that a database client uses to establish a connection to a database. It contains all the necessary details such as the type of database, the server location, the database name, the user credentials, and other connection parameters.

Similar to the go/sql approach, opening our connection to the database using is straightforward. We just need to assemble our DSN(we used a DB URL in the other approach) and use it to initialize a new connection to our database through GORM.

In the same way as the other approach, we will be using a function to summon our batch manager inside our benchmark, and that function looks as follows:

type gormBatchManager struct {

Conn *gorm.DB

}

func NewGormBatchManager() (gormBatchManager, error) {

conn, err := setup()

if err != nil {

return gormBatchManager{}, fmt.Errorf("failed to start a gorm batch manager: %w", err)

}

return gormBatchManager{conn}, nil

}

Last but not least, the following is the method that we will be using to persist contacts in batches using GORM:

func (gbm gormBatchManager) SaveContactsInBatches(c any) error {

if gbm.Conn.Create(c); gbm.Conn.Error != nil {

return fmt.Errorf("gorm: failed to insert records in batches: %w", gbm.Conn.Error)

}

return nil

}

As you can tell, it requires less code and it does not need any additional operation on top of the given data to save the records. The size of our batches is controlled by the CreateBatchSize field, that’s set when initializing our database connection with GORM. Compared to the go/sql approach this one is simpler to follow yet quite effective.

Now that we have seen both sides of the coin, let’s see what our benchmark tests look like.

Running Our Benchmarks

If you’d like to follow along, you will need to setup your database first. We’ve made this a bit easier for you this time. Instead of having to run SQL commands directly on the database, you just need to run the following command:

$ go run cmd/setup/main.go

You will need to have PSQL installed to run this one.

This will tell our program to connect to our local Postgres database and run the two SQL scripts we have in our cmd/setup/migrations folder.

Now, let’s see how our benchmark tests look like:

func BenchmarkSaveContactsInBatches(b *testing.B) {

gbm, err := gorman.NewGormBatchManager()

if err != nil {

b.Fatal(err)

}

gbm.Conn.Exec("TRUNCATE contacts;")

prepareTestCases()

b.ResetTimer()

for _, tcase := range tcases {

b.Run(fmt.Sprintf("records_number_%d", tcase.RecordsToCreate), func(b *testing.B) {

for i := 0; i < b.N; i++ {

err := gbm.SaveContactsInBatches(tcase.Contacts)

if err != nil {

b.Fatal(err.Error())

}

}

})

}

}

Bear in mind, both benchmark tests are pretty much the same in terms of setup, execution and teardown, with the only differences being the type of batch manager used and the type of connection to our database.

First, we setup everything we need for our test by initializing the batch manager. This will also initialize our connection to the database. Then, we run a truncate on the contacts table to clear any records we may have created in previous runs.

Next, we run the prepareTestCases function, to generate as many fake records as we need an keep them in memory in a variable that holds the number of records we want to create for each test scenario. To better understand this, let’s see how that function looks:

func generateDummyContacts(numberOfContacts int) Contacts {

var contacts Contacts

for i := 0; i < numberOfContacts; i++ {

contacts = append(contacts, Contact{

FirstName: fake.FirstName(),

LastName: fake.LastName(),

Email: fake.EmailAddress(),

})

}

return contacts

}

func prepareTestCases() {

for i := range tcases {

tcases[i].Contacts = generateDummyContacts(tcases[i].RecordsToCreate)

}

}

And, here’s the variable that holds the test cases we are going to be running for our benchmarks(also known as tcases):

var tcases = []struct {

RecordsToCreate int

Contacts internal.Contacts

}{

{RecordsToCreate: 100},

{RecordsToCreate: 1_000},

{RecordsToCreate: 10_000},

{RecordsToCreate: 100_000},

{RecordsToCreate: 300_000},

{RecordsToCreate: 500_000},

{RecordsToCreate: 1_000_000},

}

Now, going back to our benchmark, the next thing we will be calling is b.ResetTimer. This is because we don’t want our setup step to be taken into account when running our test, and this function zeroes the elapsed benchmark time and memory allocation counts.

Since we’re running this test with multiple inputs, we call the Run method of testing.B to perform sub-benchmark for each one.

Now that we are aware of those elements, we can use the following command to run our benchmark test:

$ GOMAXPROCS=2 go test -bench=Batches -timeout 30m -count 6 -benchtime=20x ./internal/benchmark | tee results/gorm-bench.txt

Both files can be found inside the results folder. These are the ones you can use to see the comparison using benchstat.

GOMAXPROCS will tell the test suite to utilize two CPU cores to perform the benchmarks. We’re also passing a timeout of 30m since we’re inserting quite the amount of records and don’t want our benchmark to be interrupted(It’s 11m by default).

With the -count flag, we’re asking the benchmark to run each scenario six times, each will have 20 loops to be executed, which is specified through the benchtime flag.

The tee command will help us see the results of our benchmarks while saving the results to a text file, so we can pass it to benchstat for the comparison.

The Results

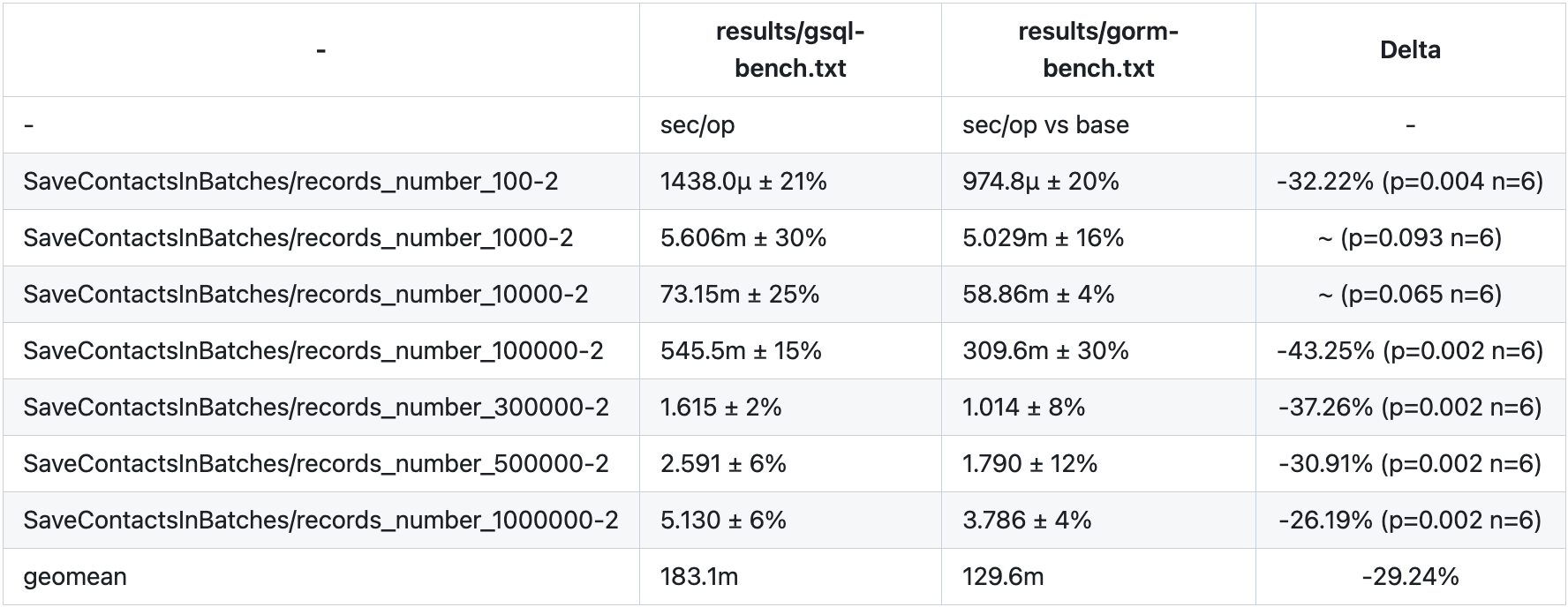

Once we run both benchmark tests, we can use benchstat to compare both runs, which should lead us to the following results:

The benchstat results consist of several key components, including:

- Time/op: This denotes the mean time taken for a specific benchmark operation.

- Delta: This indicates the percentage change in performance between two benchmarks.

- P-value: A statistical measure that evaluates the likelihood of differences being the result of random chance. N: The number of valid samples considered in the comparison.

When examining the results of benchstat, it’s important to note that a negative percentage in the delta column means that there has been an improvement, while a positive percentage indicates that there has been a decrease in performance. A low p-value implies that the differences are not likely due to chance, indicating that the performance change is statistically significant. To ensure reliability in comparisons, it’s important to consider the sample size (n).

In summary, the GORM way consistently outperforms the go/sql way across various record numbers, with significant speed improvements in most cases. The geomean also indicates a notable performance advantage for GORM over the go/sql approach.

Closing Thoughts

Comparing the first approach which uses the database/sql package and this one it’s kind of like comparing manual assembly to using a ready-made tool. The code employed on the first approach was hard to follow and some constraints as the parameter limit(65.535) aren’t exactly visible during build time.

With GORM, the process of performing batch inserts into a database becomes much simpler and easier to comprehend. Moreover, GORM provides additional flexibility by allowing us to configure the batch size according to our needs. Not to mention, the other features that we’ll be adding to our toolbelt by incorporating GORM in our Go applications.

Remember, sometimes the best solution is not always the one we’re accustomed to, but rather the one that balances performance, readability, and scalability to meet the unique demands of our growing applications.